Setup Pipeline

Templates#

RDBMS to BigQuery#

Load data of a table from RDBMS (Mysql, Postgres, ...) to BigQuery.

Support:

- Mysql

- Postgres

MongoDB to BigQuery#

Load data of a collection from MongoDB to BigQuery.

Genric Transformation#

Create dependencies for transformations.

If you have multiple transformations and want to run sequentially, you can setup a pipeline and define the order you want each transformation to run.

info

Each template will have slightly different configurations depend on datasource.

Configuration#

General Information#

Name

Description

Schedule Time: schedule will be in Ho Chi Minh Timezone (+7)

On demand: run mannually.

Basic options: Hourly, Daily, Weekly, Monthly, Yearly (this will run at the beginning of each time unit). Example:

- Hourly: start at minute 0 on every hour.

- Daily: start at 00:00 every day.

Custom option: this provide you a flexibility to set schedule.

Please find out here

note

If you choose

Custom, you have to validate your expression before saving pipeline.Minimum schedule interval: 10 minutes.

Source Information#

IMPORTANT

Source Datasource, Table Name, Load Strategy cannot be changed once pipeline is created.

Source Datasource: datasource (RDBMS or MongoDB) that is created in

Datasources.Table Name: input table name you want to extract data.

Extract Mode: how to extract data from source table

Full: extract all data from source table each time pipeline runs.

Incremental: extract a partial data from source table each time pipeline runs. This is preferred way to extract data from table with large data.

If this mode is selected, you will need to provide

Max fields(columns) that we use to keep track of data in source table.note

You can define multiple fields to be max fields (separated by comma

,) and change this option later.

Load Strategy

Merge: (similar to Upsert) when loading data to destination table, if records do not exist then insert new rows, otherwise update existings records based on

Key fields.If this strategy is selected, you will need to provide

Key fields(usually Primary Key for RDBMS tables or_idif it's MongoDB).Overwrite: when loading data to destination table, replace all data in the table with new data.

Append: when loading data to destination table, only insert data to destination table.

caution

Since BigQuery is Columnar Database, its table does not have primary key fundamentally, so it does not have any constraints. Thus, be careful when you choose this strategy because destination table might contains duplicate data.

There are some additional configurations for MongoDB, you can checkout here

Google Cloud Storage (GCS) Information#

Staging GCS Bucket: where data will be stored.

Staging GCS Prefix: prefix folder inside bucket, if provided, it will store data as follow

prefix_folder/table_name/staging_partitionotherwise, default apply as follow

table_name/staging_partition

BigQuery Staging Information#

- Staging Dataset: name of dataset where staging table will be located.

info

If dataset does not exists, when pipeline runs it will create a dataset with provided name. This also apply for Destination Dataset

Staging Table: name of table that will be created as external table that link to GCS folder.

Custom Staging Partition: (Hive partitioning) If you are familiar with

Hive Partitioningthen you can defined your own partition, otherwise just skip this option.If this option is checked, you will need to provide:

Staging Table Partition Columns: columns that are used for partitioning. (Separated by comma

,)Default:

dt, hourStaging Table Partition Format: format of above columns. (Separated by slash

/)Supported format: date, year, month, day, hour.

Default:

date/hourcaution

Please provide format of columns with the same order as columns.

Example

- Staging Table Partition Columns:

year, month, day, hour - Staging Table Partition Format:

year/month/day/hour

BigQuery Destination Information#

Dwh Datasource: BigQuery Connection that is created in

Datasources.Destination Dataset: name of dataset where destinaton table will be located.

Destination Table: name of table that will be created as native table.

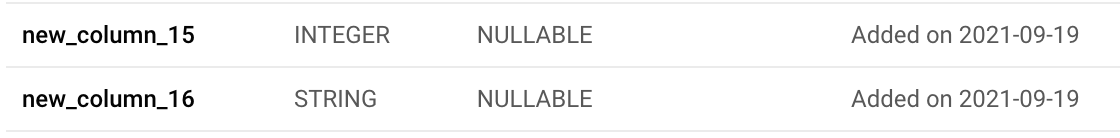

Auto Update Schema: If this option is enabled, whenever source table has new fields, new fields will be added to destination table, and if

Alert Channelhas been set, a message will be sent to that channel to notify that table schema has been updated with new fields.Added date will be updated to description of new fields.

If otherwise you do not want to enable this option, you will still receive a message about new fields and have to update destination table schema manually. Within 3 pipeline runs, if you have not updated destination table schema, we assumed that you have decided to ignore new fields and we will not send any messages about new fields for that table in the future unless you update destination table schema to match source table schema or enable this option.

note

Currently, BigQuery does not support update column type, so this option will only add new fields.

Is Partitioned Table: create destination table with a partition. If this option is checked, you will need to provide:

Destination Table Partition Column Field: column that will be used to partition table (Timestamp or date).

Destination Table Partition Column Type: type of partition.

Support:

Day, Hour, Month, Yearnote

BigQuery only support 4000 partitions per table. Recommend

Dayas the smallest partition type, you should not useHourunless that table is too big (events, streaming).Destination Table Require Partition Filter: By default, you can execute this query from a partitioned table

SELECT * FROM partitioned_tableHowever, with this option enabled you cannot perform above query. You have to add filter with partitioned column.

SELECT * FROM partitioned_table WHERE partitioned_column > '2021-09-01'This will be come handy when you have big table and users do not familiar with partitioning.